Lars Hörmander, who made fundamental contributions to all areas of partial differential equations, but particularly in developing the analysis of variable-coefficient linear PDE, died last Sunday, aged 81.

I unfortunately never met Hörmander personally, but of course I encountered his work all the time while working in PDE. One of his major contributions to the subject was to systematically develop the calculus of Fourier integral operators (FIOs), which are a substantial generalisation of pseudodifferential operators and which can be used to (approximately) solve linear partial differential equations, or to transform such equations into a more convenient form. Roughly speaking, Fourier integral operators are to linear PDE as canonical transformations are to Hamiltonian mechanics (and one can in fact view FIOs as a quantisation of a canonical transformation). They form a large class of transformations: for instance, the Fourier transform, pseudodifferential operators, and smooth changes of the spatial variable are all examples of FIOs, and (as long as certain singular situations are avoided) the composition of two FIOs is again an FIO.

The full theory of FIOs is quite extensive, occupying the entire final volume of Hörmander’s famous four-volume series “The Analysis of Linear Partial Differential Operators”. I am certainly not going to attempt to summarise it here, but I thought I would try to motivate how these operators arise when trying to transform functions. For simplicity we will work with functions $f \in L^2(\mathbb{R}^n)$ on a Euclidean domain $\mathbb{R}^n$ (although FIOs can certainly be defined on more general smooth manifolds, and there is an extension of the theory that also works on manifolds with boundary). As this will be a heuristic discussion, we will ignore all the (technical, but important) issues of smoothness or convergence with regard to the functions, integrals and limits that appear below, and be rather vague with terms such as “decaying” or “concentrated”.

A function $f \in L^2(\mathbb{R}^n)$ can be viewed from many different perspectives (reflecting the variety of bases, or approximate bases, that the Hilbert space $L^2(\mathbb{R}^n)$ offers). Most directly, we have the physical space perspective, viewing $f$ as a function $x \mapsto f(x)$ of the physical variable $x \in \mathbb{R}^n$. In many cases, this function will be concentrated in some subregion of physical space. For instance, a gaussian wave packet

where

,

and

are parameters, would be physically concentrated in the ball

. Then we have the

frequency space (or momentum space) perspective, viewing $f$ now as a function $\xi \mapsto \hat f(\xi)$ of the frequency variable $\xi \in \mathbb{R}^n$. For this discussion, it will be convenient to normalise the Fourier transform using a small constant $\hbar > 0$

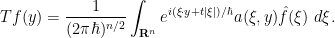

(which has the physical interpretation of

Planck’s constant if one is doing quantum mechanics), thus

For instance, for the gaussian wave packet

(1), one has

and so we see that

is concentrated in frequency space in the ball

.
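To make the two concentration claims concrete, here is a minimal one-dimensional numerical sketch. It assumes a particular normalisation that the text as extracted does not confirm: the packet $f(x) = e^{i\xi_0 x/\hbar} e^{-(x-x_0)^2/(2\hbar)}$ and the transform $\hat f(\xi) = (2\pi\hbar)^{-1/2}\int e^{-i\xi x/\hbar} f(x)\,dx$. The function names and parameter values are purely illustrative.

```python
import cmath
import math

def wave_packet(x, x0, xi0, hbar):
    """Assumed gaussian wave packet centred at x0 with frequency xi0."""
    return cmath.exp(1j * xi0 * x / hbar) * math.exp(-(x - x0) ** 2 / (2 * hbar))

def semiclassical_ft(f, xi, hbar, lo, hi, n=4000):
    """Midpoint-rule value of (2*pi*hbar)^(-1/2) * integral of exp(-i*xi*x/hbar) f(x) dx."""
    dx = (hi - lo) / n
    total = 0j
    for k in range(n):
        x = lo + (k + 0.5) * dx
        total += cmath.exp(-1j * xi * x / hbar) * f(x)
    return total * dx / math.sqrt(2 * math.pi * hbar)

hbar, x0, xi0 = 0.01, 0.3, 1.0
width = math.sqrt(hbar)  # the scale sqrt(hbar) of the packet in both x and xi

def ft_magnitude(xi):
    f = lambda x: wave_packet(x, x0, xi0, hbar)
    # Integrate over ten widths either side of x0; the tails are negligible.
    return abs(semiclassical_ft(f, xi, hbar, x0 - 10 * width, x0 + 10 * width))

at_centre = ft_magnitude(xi0)
one_width_away = ft_magnitude(xi0 + width)
five_widths_away = ft_magnitude(xi0 + 5 * width)
print(at_centre, one_width_away, five_widths_away)
```

Under these assumptions $|\hat f(\xi)| = e^{-(\xi-\xi_0)^2/(2\hbar)}$, so the magnitude is largest at $\xi_0$ and is already tiny a few multiples of $\sqrt{\hbar}$ away, which is the sense in which the packet is concentrated in a frequency ball of radius comparable to $\sqrt{\hbar}$.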

However, there is a third (but less rigorous) way to view a function $f$ in $L^2(\mathbb{R}^n)$, which is the phase space perspective in which one tries to view $f$ as distributed simultaneously in physical space and in frequency space, thus being something like a measure on the

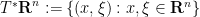

phase space

. Thus, for instance, the function

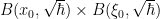

(1) should heuristically be concentrated on the region

in phase space. Unfortunately, due to the uncertainty principle, there is no completely satisfactory way to canonically and rigorously define what the “phase space portrait” of a function $f$ should be. (For instance, the Wigner transform of $f$ can be viewed as an attempt to describe the distribution of the energy of $f$ in phase space, except that this transform can take negative or even complex values; see Folland’s book for further discussion.) Still, it is a very useful heuristic to think of functions as having a phase space portrait, which is something like a non-negative measure on phase space that captures the distribution of functions in both space and frequency, albeit with some “quantum fuzziness” that shows up whenever one tries to inspect this measure at scales of physical space and frequency space that together violate the uncertainty principle. (The score of a piece of music is a good everyday example of a phase space portrait of a function, in this case a sound wave; here, the physical space is the time axis (the horizontal dimension of the score) and the frequency space is the vertical dimension. Here, the time and frequency scales involved are well above the uncertainty principle limit (a typical note lasts many hundreds of cycles, whereas the uncertainty principle kicks in at

$O(1)$ cycles) and so there is no obstruction here to musical notation being unambiguous.) Furthermore, if one takes certain asymptotic limits, one can recover a precise notion of a phase space portrait; for instance if one takes the semiclassical limit $\hbar \to 0$ then, under certain circumstances, the phase space portrait converges to a well-defined classical probability measure on phase space; closely related to this is the high frequency limit of a fixed function, which among other things defines the wave front set of that function, which can be viewed as another asymptotic realisation of the phase space portrait concept.

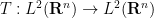

If functions in $L^2(\mathbb{R}^n)$ can be viewed as a sort of distribution in phase space, then linear operators $T$ on $L^2(\mathbb{R}^n)$ should be viewed as various transformations on such distributions on phase space. For instance, a pseudodifferential operator $a(X,D)$ should correspond (as a zeroth approximation) to multiplying a phase space distribution by the symbol $a(x,\xi)$ of that operator, as discussed in this previous blog post. Note that such operators only change the amplitude of the phase space distribution, but not the support of that distribution.
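To fix ideas, one standard way to attach an operator $a(X,D)$ to a symbol $a(x,\xi)$ is the Kohn-Nirenberg quantisation written below. This is an assumed convention, used together with the semiclassical Fourier transform $\hat f(\xi) = (2\pi\hbar)^{-n/2}\int e^{-i\xi\cdot x/\hbar} f(x)\,dx$; the post referred to above may use a different but essentially equivalent one.

```latex
\[
  a(X,D) f(x) \;=\; \frac{1}{(2\pi\hbar)^{n/2}}
  \int_{\mathbb{R}^n} e^{i \xi \cdot x/\hbar}\, a(x,\xi)\, \hat f(\xi)\, d\xi .
\]
```

When $a$ depends only on $x$ this is multiplication by $a(x)$, and when $a$ depends only on $\xi$ it is a Fourier multiplier. In both special cases the operator visibly rescales the function where the symbol says to, without moving it in physical or frequency space.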

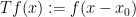

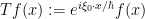

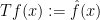

Now we turn to operators that alter the support of a phase space distribution, rather than the amplitude; we will focus on unitary operators to emphasise the amplitude preservation aspect. These will eventually be key examples of Fourier integral operators. A physical translation

should correspond to pushing forward the distribution by the transformation

, as can be seen by comparing the physical and frequency space supports of

with that of

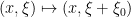

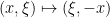

. Similarly, a frequency modulation

should correspond to the transformation

; a linear change of variables

, where

is an invertible linear transformation, should correspond to

; and finally, the Fourier transform

should correspond to the transformation

.
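In one dimension, and under sign conventions that are assumptions here (Fourier kernel $e^{-i\xi x/\hbar}$), the four examples can be written out as follows: the translation $f(x) \mapsto f(x - x_0)$ moves phase space by $(x,\xi) \mapsto (x + x_0, \xi)$; the modulation $f(x) \mapsto e^{i\xi_0 x/\hbar} f(x)$ by $(x,\xi) \mapsto (x, \xi + \xi_0)$; the dilation $f(x) \mapsto |L|^{-1/2} f(x/L)$ by $(x,\xi) \mapsto (Lx, \xi/L)$; and the Fourier transform by $(x,\xi) \mapsto (\xi, -x)$. The sketch below checks a feature these maps share, namely that their Jacobians preserve the standard area form on phase space, which the discussion that follows turns into a requirement. The helper names are hypothetical.

```python
# Matrix of the standard symplectic (area) form on one-dimensional phase space (x, xi).
OMEGA = [[0.0, 1.0], [-1.0, 0.0]]

def jacobians(scale=2.0):
    """Jacobians of the four example maps; shifts have identity Jacobian."""
    return {
        "translation (x, xi) -> (x + x0, xi)": [[1.0, 0.0], [0.0, 1.0]],
        "modulation (x, xi) -> (x, xi + xi0)": [[1.0, 0.0], [0.0, 1.0]],
        "dilation (x, xi) -> (L x, xi / L)": [[scale, 0.0], [0.0, 1.0 / scale]],
        "Fourier transform (x, xi) -> (xi, -x)": [[0.0, 1.0], [-1.0, 0.0]],
    }

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def transpose(a):
    return [[a[j][i] for j in range(2)] for i in range(2)]

def preserves_symplectic_form(m, tol=1e-12):
    """True when M^T * OMEGA * M equals OMEGA up to tol."""
    lhs = matmul(transpose(m), matmul(OMEGA, m))
    return all(abs(lhs[i][j] - OMEGA[i][j]) <= tol for i in range(2) for j in range(2))

for name, m in jacobians().items():
    print(name, preserves_symplectic_form(m))

# A non-example: stretching x without compressing xi changes phase space area.
print("stretch x only", preserves_symplectic_form([[2.0, 0.0], [0.0, 1.0]]))
```

The non-example at the end corresponds to the change of variables $f(x) \mapsto f(x/2)$ with the frequency variable artificially left alone, which is not what any of the unitary operators above actually does.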

Based on these examples, one may hope that given any diffeomorphism

of phase space, one could associate some sort of unitary (or approximately unitary) operator

, which (heuristically, at least) pushes the phase space portrait of a function forward by

. However, there is an obstruction to doing so, which can be explained as follows. If

pushes phase space portraits by

, and pseudodifferential operators

multiply phase space portraits by

, then this suggests the intertwining relationship

and thus

is approximately conjugate to

:

Applying commutators, we conclude the approximate conjugacy relationship

Now, the pseudodifferential calculus (as discussed in

this previous post) tells us (heuristically, at least) that

and

where

is the Poisson bracket. Comparing this with

(2), we are then led to the compatibility condition

thus

needs to preserve (approximately, at least) the Poisson bracket, or equivalently

needs to be a

symplectomorphism (again, approximately at least).
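The chain of heuristics in the last two paragraphs can be written out as follows. The notation is an assumption made for this sketch: $\Phi$ is the diffeomorphism of phase space, $T_\Phi$ the operator that pushes phase space portraits forward by $\Phi$, $a(X,D)$ and $b(X,D)$ are pseudodifferential operators with symbols $a$ and $b$, and $\{a,b\}$ is the Poisson bracket. The exact constant in front of the commutator depends on conventions, but it is the same on both sides and so does not affect the conclusion.

```latex
\begin{align*}
a(X,D)\, T_\Phi &\approx T_\Phi\, (a \circ \Phi)(X,D)
  && \text{(intertwining)} \\
(a \circ \Phi)(X,D) &\approx T_\Phi^{-1}\, a(X,D)\, T_\Phi
  && \text{(conjugacy)} \\
\tfrac{1}{i\hbar}\bigl[(a \circ \Phi)(X,D),\, (b \circ \Phi)(X,D)\bigr]
  &\approx T_\Phi^{-1}\, \tfrac{1}{i\hbar}\bigl[a(X,D),\, b(X,D)\bigr]\, T_\Phi
  && \text{(apply commutators)} \\
\tfrac{1}{i\hbar}\bigl[a(X,D),\, b(X,D)\bigr] &\approx \{a,b\}(X,D)
  && \text{(pseudodifferential calculus)} \\
\{a \circ \Phi,\, b \circ \Phi\} &\approx \{a,b\} \circ \Phi
  && \text{(compatibility condition)}
\end{align*}
```

The last line comes from applying the fourth line to both sides of the third and then using the conjugacy relation once more with $\{a,b\}$ in place of $a$.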

Now suppose that

is a symplectomorphism. This is morally equivalent to the graph

being a

Lagrangian submanifold of

(where we give the second copy of phase space the negative

of the usual symplectic form

, thus yielding

as the full symplectic form on

; this is another instantiation of the closed graph theorem, as mentioned in

this previous post). This graph is known as the

canonical relation for the (putative) FIO that is associated to

. To understand what it means for this graph to be Lagrangian, we coordinatise

as

suppose temporarily that this graph was (locally, at least) a smooth graph in the

and

variables, thus

for some smooth functions

. A brief computation shows that the Lagrangian property of

is then equivalent to the compatibility conditions

for

, where

denote the components of

. Some Fourier analysis (or Hodge theory) lets us solve these equations as

for some smooth potential function

. Thus, we have parameterised our graph

as

A reasonable candidate for an operator associated to

and

in this fashion is the oscillatory integral operator

for some smooth amplitude function

(note that the Fourier transform is the special case when

and

, which helps explain the genesis of the term “Fourier integral operator”). Indeed, if one computes an inner product

for gaussian wave packets

of the form

(1) and localised in phase space near

respectively, then a Taylor expansion of

around

, followed by a

stationary phase computation, shows (again heuristically, and assuming

is suitably non-degenerate) that

has

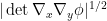

(3) as its canonical relation. (Furthermore, a refinement of this stationary phase calculation suggests that if

is normalised to be the

half-density

, then

should be approximately unitary.) As such, we view

(4) as an example of a Fourier integral operator (assuming various smoothness and non-degeneracy hypotheses on the phase

and amplitude

which we do not detail here).
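For reference, one self-consistent way to write out the objects in the last two paragraphs is sketched below. The symbol names ($\Sigma$, $F$, $G$, $\phi$, $a$, $T$) and the sign and normalisation conventions are assumptions, chosen to be compatible with a Fourier transform whose kernel is $e^{-i\xi\cdot x/\hbar}$; only the equation numbers (3) and (4) are taken from the references in the text.

```latex
\begin{gather*}
\Sigma = \{ (x, \xi, y, \eta) : (y,\eta) = \Phi(x,\xi) \}
  = \{ (x, F(x,y), y, G(x,y)) \} \\
\partial_{x_j} F_i = \partial_{x_i} F_j, \qquad
\partial_{y_j} G_i = \partial_{y_i} G_j, \qquad
\partial_{y_j} F_i = -\partial_{x_i} G_j
  \qquad (1 \le i,j \le n) \\
F = -\nabla_x \phi, \qquad G = \nabla_y \phi \\
\Sigma = \{ (x, -\nabla_x \phi(x,y), y, \nabla_y \phi(x,y)) \} \tag{3} \\
T f(y) = \frac{1}{(2\pi\hbar)^{n/2}} \int_{\mathbb{R}^n}
  e^{i\phi(x,y)/\hbar}\, a(x,y)\, f(x)\, dx \tag{4}
\end{gather*}
```

With these conventions the Fourier transform is the case $a = 1$, $\phi(x,y) = -x \cdot y$, for which (3) becomes the graph of $(x,\xi) \mapsto (\xi, -x)$. The half-density normalisation mentioned above would read $a = |\det \nabla_x \nabla_y \phi|^{1/2}$, which is indeed equal to $1$ in the Fourier case.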

Of course, it may be the case that

is not a graph in the

coordinates (for instance, the key examples of translation, modulation, and dilation are not of this form), but then it is often a graph in some other pair of coordinates, such as

. In that case one can compose the oscillatory integral construction given above with a Fourier transform, giving another class of FIOs of the form

This class of FIOs covers many important cases; for instance, the translation, modulation, and dilation operators considered earlier can be written in this form after some Fourier analysis. Another typical example is the half-wave propagator

for some time

, which can be written in the form

This corresponds to the phase space transformation

, which can be viewed as the classical propagator associated to the “quantum” propagator

. More generally, propagators for linear Hamiltonian partial differential equations can often be expressed (at least approximately) by Fourier integral operators corresponding to the propagator of the associated

classical Hamiltonian flow associated to the symbol of the Hamiltonian operator

; this leads to an important mathematical formalisation of the

correspondence principle between quantum mechanics and classical mechanics, which is one of the foundations of microlocal analysis and which was extensively developed in Hörmander’s work. (More recently, numerically stable versions of this theory have been developed to allow for rapid and accurate numerical solutions to various linear PDE, for instance through Emmanuel Candès’ theory of

curvelets, so the theory that Hörmander built now has some quite significant practical applications in areas such as geology.)
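The half-wave example above can be checked numerically in one dimension. The sketch below assumes the form $Uf(x) = (2\pi\hbar)^{-1/2}\int e^{i(\xi x + t|\xi|)/\hbar}\,\hat f(\xi)\,d\xi$ for the propagator $e^{it\sqrt{-\Delta}}$, applied to the packet $f(x) = e^{i\xi_0 x/\hbar} e^{-(x-x_0)^2/(2\hbar)}$, whose transform under the kernel $e^{-i\xi x/\hbar}$ is $\hat f(\xi) = e^{-i(\xi-\xi_0)x_0/\hbar} e^{-(\xi-\xi_0)^2/(2\hbar)}$. These formulas and all names are assumptions for illustration; in particular the direction of travel depends on the sign conventions chosen, which the text as extracted does not show.

```python
import cmath
import math

hbar, x0, xi0, t = 0.01, 0.3, 1.0, 0.5
width = math.sqrt(hbar)

def packet_hat(xi):
    """Transform of the assumed packet exp(i*xi0*x/hbar) * exp(-(x - x0)^2 / (2*hbar))."""
    return cmath.exp(-1j * (xi - xi0) * x0 / hbar) * math.exp(-(xi - xi0) ** 2 / (2 * hbar))

def half_wave(x, n=4000):
    """Midpoint-rule value of (2*pi*hbar)^(-1/2) * integral of
    exp(i*(xi*x + t*|xi|)/hbar) * packet_hat(xi) d xi."""
    lo, hi = xi0 - 10 * width, xi0 + 10 * width
    dxi = (hi - lo) / n
    total = 0j
    for k in range(n):
        xi = lo + (k + 0.5) * dxi
        total += cmath.exp(1j * (xi * x + t * abs(xi)) / hbar) * packet_hat(xi)
    return total * dxi / math.sqrt(2 * math.pi * hbar)

# Locate where the evolved packet is largest on a coarse grid of positions.
grid = [-1.0 + 0.05 * k for k in range(41)]
peak_x = max(grid, key=lambda x: abs(half_wave(x)))
print(peak_x)
```

With these conventions and $\xi_0 > 0$ the packet keeps its frequency and moves at unit speed to $x_0 - t$, which is the one-dimensional instance of a phase space map of the form $(x,\xi) \mapsto (x - t\xi/|\xi|, \xi)$; reversing the sign of $t$ in the phase reverses the direction.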

In some cases, the canonical relation

may have some singularities (such as fold singularities) which prevent it from being written as graphs in the previous senses, but the theory for defining FIOs even in these cases, and in developing their calculus, is now well established, in large part due to the foundational work of Hörmander.
